Many machine-learning tasks rely on the availability of a labelled dataset for training and tuning. But how do we go about evaluation when the dataset we have is not labelled? This is exactly the situation I found myself facing during my final MSc project. I chose to experiment with building a knowledge graph from news articles – specifically a corpus of 2081 articles from News24 on the topic of the Zondo Commission. I needed reliable methods to:

- Evaluate the open-source models I was proposing to use as the basis for my pipeline, and determine which performed best on my data

- Evaluate whether changes made to my data extraction algorithm resulted in actual improvements in performance

- Evaluate the quality of the finished product

I incorporated two ‘human-in-the-loop’ techniques to achieve this with relatively minimal effort, and in my opinion the incorporation of these techniques was one of the most positive outcomes of the project.

Correction of ML labels

It’s much easier to correct labels in a dataset once you have them, than to try to label everything from scratch (Wu, et al., 2022). With this in mind, for the base components of my information extraction pipeline, including named entity recognition (NER), coreference resolution (CR) and relation extraction (RE), I generated labels from existing models for a sample of 30 of my articles and then used LabelStudio to correct the labels. The result was a gold-standard dataset that I could use for the remainder of the project.

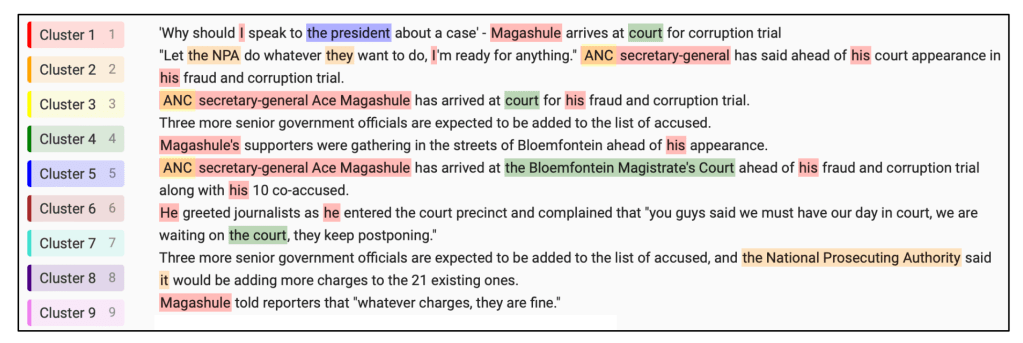

Correcting annotations for 30 articles for NER was quick and easy, and only took me 3 hours. CR (illustrated above) was a bit harder and took 6 hours. I had to wrangle clusters with mentions spanning entire articles. Some articles referred to multiple entities referenced by multiple pronouns – and it was occasionally hard to keep track of which pronoun referred to which entity. And there were terms like Vrede Dairy Farm and Vrede Dairy Project which sounded potentially synonymous but I couldn’t be sure. Ideally I would have liked to bring in a subject-matter expert to resolve such queries. RE was the most arduous task and took me 12 hours to complete. Challenges included annotating all (cross-sentence) instances of a relation, deciding which relation type was most appropriate when there was ambiguity, and simply bearing in mind all 61 of the possibilities in my chosen ontology at once. The labelling interface was not ideal here either, with some overlapping annotations making review visually tricky.

Nonetheless, after 21 hours I had a labelled dataset that was representative of my corpus and this enabled me to proceed with the rest of the project on a firm quantitative footing. The key advantages for the project were:

- Ability to quantitatively assess which base models would perform best on my corpus for each task (surprisingly the models with the best reported results in the literature were not always the best for this corpus).

- Ability to surface specific issues in the pipeline and use this information to enhance the information extraction algorithm.

- Ability to iteratively build and develop the algorithm, making sure that improvements were realised at each stage.

Use of subject-matter experts

The final knowledge graph identified 6944 entities and 10829 relations from the 2081 articles. To help evaluate these outputs I was fortunate to have the assistance of two subject matter experts: Adriaan Basson (Editor-in-chief at News24) and Kyle Cowan (investigative journalist at News24). They selected 10 entities-of-interest (or ‘egos’ in network science terms). In the time available we managed to review 6 of these, including the relations identified and associate entities. In total 251 entities’ datapoints were reviewed along with 333 relations. Because of their deep knowledge of the subject it only took 3½ hours to do this! The key advantages for the project were:

- An accurate calculation of precision was possible for a sample of the final knowledge graph.

- Recall issues were also highlighted by asking ‘What’s missing?’ for each ego.

- These results could the be cross-referenced back to the original metrics to get a feel for how useful the original human-in-the-loop dataset was for evaluating performance. It turned out the observed differences were within reasonable bounds of expectation, given the disparity in the size of the datasets, where the full corpus obviously provided a much more representative test of the model’s performance under varied circumstances. It confirmed that working with a sample of annotated articles was indeed an effective approach, would could potentially be made even more effective by annotating a slightly larger sample.

- Results indicated quite clearly which areas to explore next in the build-and-evaluate process so as to continue refining outputs.

- Furthermore a second gold-standard dataset had effectively been created that could be used to evaluate future iterations.

The following video highlights how these techniques were used in the project and their advantages: